Cybersecurity Snapshot: CSA Offers Guidance on How To Use ChatGPT Securely in Your Org

Check out the Cloud Security Alliance’s white paper on ChatGPT for cyber pros. Plus, the White House’s latest efforts to promote responsible AI. Also, have you thought about vulnerability management for AI systems? In addition, the “godfather of AI” sounds the alarm on AI dangers. And much more!

Dive into six things that are top of mind for the week ending May 5.

1 - CSA unpacks ChatGPT for security folks

Are you a security pro with ChatGPT-induced “exploding head syndrome”? Join the club.

Here’s a common scenario: Your business is eager to use – or maybe is already using – ChatGPT, and the security team is scrambling to figure out what’s ok and not ok for your organization to do with the ultra-popular generative AI chatbot.

The security team must draft and adopt usage policies and guidelines, but the daily avalanche of ChatGPT information, along with your company’s urgency to streamline processes with ChatGPT, isn’t helping.

Well, a nifty and free resource that may help was just released by the Cloud Security Alliance (CSA). “Security Implications of ChatGPT,” a 54-page white paper, sidesteps “in the weeds” discussions about AI technology and instead aims to help cybersecurity pros grasp ChatGPT’s capabilities and business impact.

Topics addressed in the white paper include:

- An explanation of what ChatGPT is

- How bad actors can use it to boost their attacks

- How defenders can leverage it for their cybersecurity programs

- How to securely use ChatGPT for business

- Limitations of generative AI

- Potential future developments

To get all the details, read the white paper and check out a slide presentation about it.

For more information about ChatGPT, generative AI and cybersecurity:

- “How to Maintain Cybersecurity as ChatGPT and Generative AI Proliferate” (Acceleration Economy)

- “ChatGPT, the AI Revolution, and the Security, Privacy and Ethical Implications” (SecurityWeek)

- “The Fusion of AI and Cybersecurity Is Here: Are You Ready?” (CompTIA)

- “How Generative AI Is Changing Security Research” (Tenable)

- “The New Risks ChatGPT Poses to Cybersecurity” (Harvard Business Review)

VIDEOS

ChatGPT: Cybersecurity's Savior or Devil? (Security Weekly)

Introduction to the NIST AI Risk Management Framework (NIST)

2 - White House unveils efforts to boost secure AI

As concern intensifies over the potential to abuse ChatGPT, the Biden Administration on Thursday met with tech CEOs at the White House and announced steps to spur development of responsible AI and shield people from potential harm.

Initiatives announced include:

- $140 million to launch seven new National AI Research Institutes to boost AI collaboration across academia, government and the private sector

- Assessments of existing generative AI systems by AI developers such as Google and Microsoft to determine if and how they should be modified for the public good

- New policies to guide how the U.S. government develops, adopts and uses AI systems

“As I shared today with CEOs of companies at the forefront of American AI innovation, the private sector has an ethical, moral, and legal responsibility to ensure the safety and security of their products,” Vice President Harris said in a statement.

CEOs who participated in the meeting included Google’s Sundar Pichai and Microsoft’s Satya Nadella, as well as Sam Altman from OpenAI, maker of ChatGPT.

For more information, read the White House announcement, the statement from Vice President Harris and coverage from The Verge, The New York Times, MarketWatch, the Associated Press and CNBC.

3 - Is AI vulnerability management on your radar screen?

And, yes, more AI: How do you address software vulnerabilities in AI systems? Will traditional vulnerability management (VM) do the trick – or do you need new processes and technologies?

With AI products getting eagerly adopted by organizations, these seem like highly relevant questions for cybersecurity teams. To get up to speed, check out a new report from Stanford University and Georgetown University titled “Adversarial Machine Learning and Cybersecurity: Risks, Challenges, and Legal Implications.”

“The report starts from the premise that AI systems, especially those based on the techniques of machine learning, are remarkably vulnerable to a range of attacks,” UC Berkeley Lecturer Jim Dempsey, one of the report’s authors, wrote in a blog titled “Addressing the Security Risks of AI.”

According to the authors, the 35-page document, which is based on a workshop held last summer, aims to accomplish two main goals:

- Discuss how AI vulnerabilities are different from conventional types of software bugs, as well as the current state of information sharing about them

- Offer recommendations that fall under four high-level categories:

  - Extending traditional cybersecurity for AI vulnerabilities

  - Improving information sharing, transparency and accountability

  - Clarifying AI vulnerabilities’ legal status

  - Improving AI security through effective research

“AI vulnerabilities may not map straightforwardly onto the traditional definition of a patch-to-fix cybersecurity vulnerability,” the report reads. “The differences … have generated ambiguity regarding the status of AI vulnerabilities and AI attacks. This in turn poses a series of corporate responsibility and public policy questions.”

For more information:

- “Adversarial AI Attacks Highlight Fundamental Security Issues” (Dark Reading)

- “It's time to harden AI and ML for cybersecurity” (TechTarget)

- “Vulnerability Disclosure and Management for AI/ML Systems” (Stanford University)

- “Securing government against adversarial AI” (Deloitte)

- “Adversarial machine learning explained” (CSO)

- “Adversarial machine learning 101: A new cybersecurity frontier” (Dataconomy)

VIDEOS

Vulnerabilities of Machine Learning Algorithms to Adversarial Attacks (CAE in Cybersecurity Community)

AI/ML Data Poisoning Attacks Explained and Analyzed (RealTime Cyber)

4 - Google’s AI guru quits, warns about AI dangers

And staying on the AI topic, another prominent figure is warning about the potential dangers of releasing AI products that could be misused for nefarious purposes.

This week, Geoffrey Hinton, an AI pioneer, said he resigned from Google so that he can freely voice his concerns about the risk of harm from malicious use of generative AI tools like ChatGPT.

Again, it’s a topic that cybersecurity pros should track closely from various angles: How do you prevent and respond to AI-powered attacks? How do you leverage AI for defense? How do you comply with current and future regulations and laws governing AI use? (See this blog’s first item.)

In an interview with The New York Times, Hinton urged tech companies to slow down and consider how bad actors could use generative AI chatbots to supercharge their efforts to create mayhem – which he fears may be inevitable.

“It is hard to see how you can prevent the bad actors from using it for bad things,” said the 75-year-old Hinton, a Turing Award recipient who is often referred to as “the Godfather of AI.”

He worries that the malicious use of AI chatbots will accelerate the creation of false information, including text and media, that will appear legit to the average person, creating widespread confusion about what’s real.

He echoes sentiments that concerned governments, organizations and individuals have expressed repeatedly since the release of ChatGPT, including the more than 1,000 tech experts who signed a letter in March asking for an AI moratorium.

For more information about cybersecurity and generative AI, check out these Tenable resources:

- “Six Things to Know About ChatGPT and Cybersecurity”

- “How Tenable harnesses AI to streamline research activities”

- “As ChatGPT Concerns Mount, U.S. Govt Ponders Artificial Intelligence Regulations”

- “As ChatGPT Fire Rages, NIST Issues AI Security Guidance”

- “ChatGPT Use Can Lead to Data Privacy Violations”

VIDEOS

How Generative AI is Changing Security Research

GPT-4 and ChatGPT Used as Lure in Phishing Scams Promoting Fake OpenAI Tokens

5 - CISA floats draft of “security attestation” form for software vendors

What questions should you be asking of your software vendors to ensure their products are secure? You might get good ideas from a draft questionnaire CISA has released so that the public can comment on it.

Called the “Secure Software Self-Attestation Common Form,” the document includes, for example, questions to determine whether a vendor:

- Developed its software in a secure environment

- Made a “good faith effort” to ensure the security of its supply chains

- Knows where all of its software’s components came from

- Checked for software vulnerabilities using an automated tool

Once it’s approved in final form, this is the security attestation form that software vendors will need to submit to the U.S. federal agencies to which they sell software.

If you wish to comment on the draft form, you have until June 26 to do so.

For more information:

- “GSA to start collecting letters of attestation from software vendors in mid-June” (FedScoop)

- “US government software suppliers must attest their solutions are secure” (Help Net Security)

- “Federal Vendors Given a Year to Craft SBOM To Guarantee Secure Software Development” (CPO Magazine)

- “5 Key Questions When Evaluating Software Supply Chain Security” (Dark Reading)

- “How To Assess the Cybersecurity Preparedness of IT Service Providers and MSPs” (Tenable)

VIDEOS

The challenges of securing the federal software supply chain (SC Media)

New Guidelines for Enhancing Software Supply Chain Security Under EO 14028 (RSA Conference)

6 - Here’s the top malware for Q1 2023

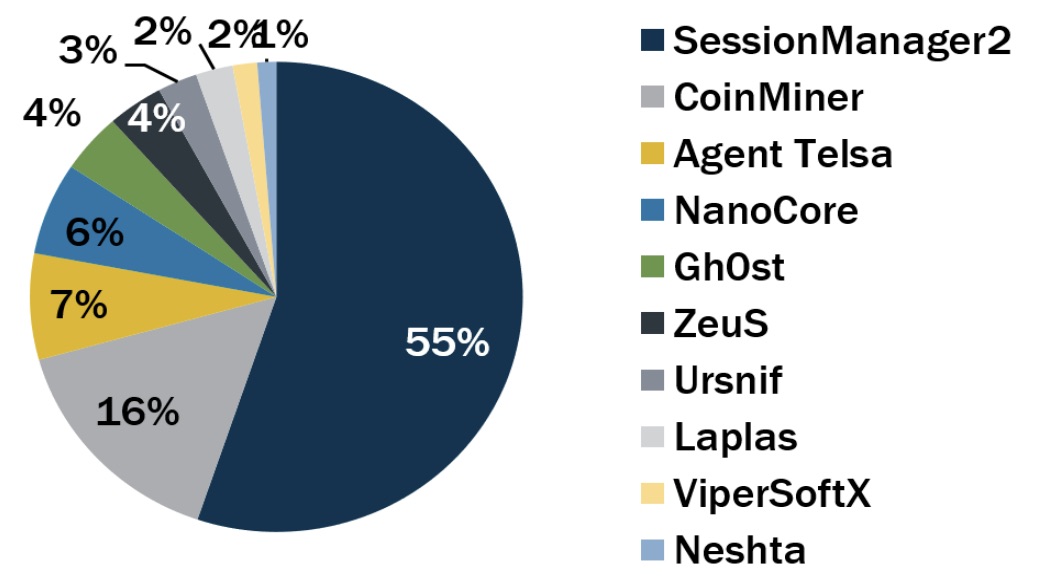

The Center for Internet Security (CIS) has ranked the most prevalent malware strains in the first quarter of 2023, with many usual suspects making a repeat appearance, and newcomers Laplas, Netshta, and ViperSoftX cracking the list.

Here are the rankings, in descending order and based on malware incidents detected by the Multi-State Information Sharing and Analysis Center (MS-ISAC):

- SessionManager2, a malicious Microsoft IIS server backdoor that gives attackers persistent, update-resistant and stealthy access to compromised infrastructure

- CoinMiner, a cryptocurrency miner that spreads using Windows Management Instrumentation (WMI) and EternalBlue

- Agent Tesla, a remote access trojan (RAT) that captures credentials, keystrokes and screenshots

- NanoCore, a RAT that spreads via malspam as a malicious Excel spreadsheet

- Gh0st, a RAT for creating backdoors to control endpoints

- ZeuS, a modular banking trojan that uses keystroke logging

- Ursnif (aka Gozi and Dreambot), a banking trojan

- Laplas, a clipper malware currently being spread by SmokeLoader downloader via phishing emails with malicious documents

- ViperSoftX, a multi-stage cryptocurrency stealer that spreads within torrents and filesharing sites

- Netshta, a file infector and info stealer that spreads via phishing and removable media, and targets executables, network shares and storage devices

Top Malware for Q1 2023

To get all the details, context and indicators of compromise for each malware, read the CIS report.

For more information about malware trends:

- “Malware statistics and facts for 2023” (Comparitech)

- “10 common types of malware attacks and how to prevent them” (TechTarget)

- “3CX Desktop App for Windows and macOS Reportedly Compromised in Supply Chain Attack” (Tenable)

- “Ransomware trends, statistics and facts in 2023” (TechTarget)

- “7 Most Common Types of Malware” (CompTIA)
